Speech & Voice User Interfaces

Created for a series of smart display products built on Hisense voice recognition technology. My contributions included designing and prototyping visual audio interactions, motion, and character animations, and building multiple high-fidelity prototypes.

Conversation Loop

The goal was to make the experience as natural and responsive as possible by mimicking a chat conversation. I created a responsive animation that adapted to the user's inputs and the system's outputs: reacting when the mic button is pressed, growing the speech bubble word by word as the user speaks, and showing loading states and responses.
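A conversation loop like this can be modeled as a small state machine. The sketch below is illustrative only: the state names, event names, and the `step` function are assumptions made for this example, not the code used in the product.

```typescript
// Hypothetical states and events for the conversation loop (illustrative names).
type LoopState = "idle" | "listening" | "loading" | "responding";

type LoopEvent =
  | { type: "MIC_PRESSED" }
  | { type: "WORD_HEARD"; word: string }
  | { type: "SPEECH_ENDED" }
  | { type: "RESPONSE_READY"; text: string }
  | { type: "RESPONSE_SHOWN" };

interface Conversation {
  state: LoopState;
  transcript: string[]; // words heard so far; the bubble grows with this list
  response: string | null;
}

const initial: Conversation = { state: "idle", transcript: [], response: null };

// Pure transition function: returns the next conversation for a given event.
function step(c: Conversation, e: LoopEvent): Conversation {
  switch (c.state) {
    case "idle":
      if (e.type === "MIC_PRESSED") {
        return { state: "listening", transcript: [], response: null };
      }
      break;
    case "listening":
      if (e.type === "WORD_HEARD") {
        return { ...c, transcript: [...c.transcript, e.word] };
      }
      if (e.type === "SPEECH_ENDED") {
        return { ...c, state: "loading" };
      }
      break;
    case "loading":
      if (e.type === "RESPONSE_READY") {
        return { ...c, state: "responding", response: e.text };
      }
      break;
    case "responding":
      if (e.type === "RESPONSE_SHOWN") {
        return { ...c, state: "idle" };
      }
      break;
  }
  return c; // events that do not apply to the current state are ignored
}
```

Keeping the transitions in one pure function means each animation (mic reaction, bubble growth, loading, response) can simply be keyed off `state` and `transcript.length`.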

Audio Feedback

I created the initial animation in After Effects and exported it to JSON using Lottie. It served as a design reference and fallback asset. Later I prototyped a dynamic animation driven by the computer's microphone input. The developer used the prototype code to carry the design into implementation.
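One way a microphone-driven animation can work is to turn each audio frame into a loudness value, smooth it, and map it to a visual scale. The helpers below are a minimal sketch under that assumption; the function names and default values are hypothetical and were chosen for illustration. In a browser, the samples could come from a Web Audio `AnalyserNode` via `getFloatTimeDomainData`.

```typescript
// Root-mean-square loudness of one audio frame (samples expected in [-1, 1]).
function rms(samples: Float32Array): number {
  if (samples.length === 0) return 0;
  let sum = 0;
  for (let i = 0; i < samples.length; i++) {
    sum += samples[i] * samples[i];
  }
  return Math.sqrt(sum / samples.length);
}

// Exponential smoothing so the shape eases toward the new level instead of jittering.
function smooth(previous: number, current: number, factor: number = 0.2): number {
  return previous + (current - previous) * factor;
}

// Map a loudness level to a visual scale, clamped between minScale and maxScale.
// `ceiling` is the level treated as "fully loud" (an assumed tuning value).
function levelToScale(
  level: number,
  minScale: number = 1,
  maxScale: number = 1.6,
  ceiling: number = 0.5
): number {
  const t = Math.min(Math.max(level / ceiling, 0), 1);
  return minScale + (maxScale - minScale) * t;
}
```

On each animation frame, the prototype would read a new buffer, compute `smooth(previousLevel, rms(buffer))`, and apply `levelToScale` to the animated shape.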

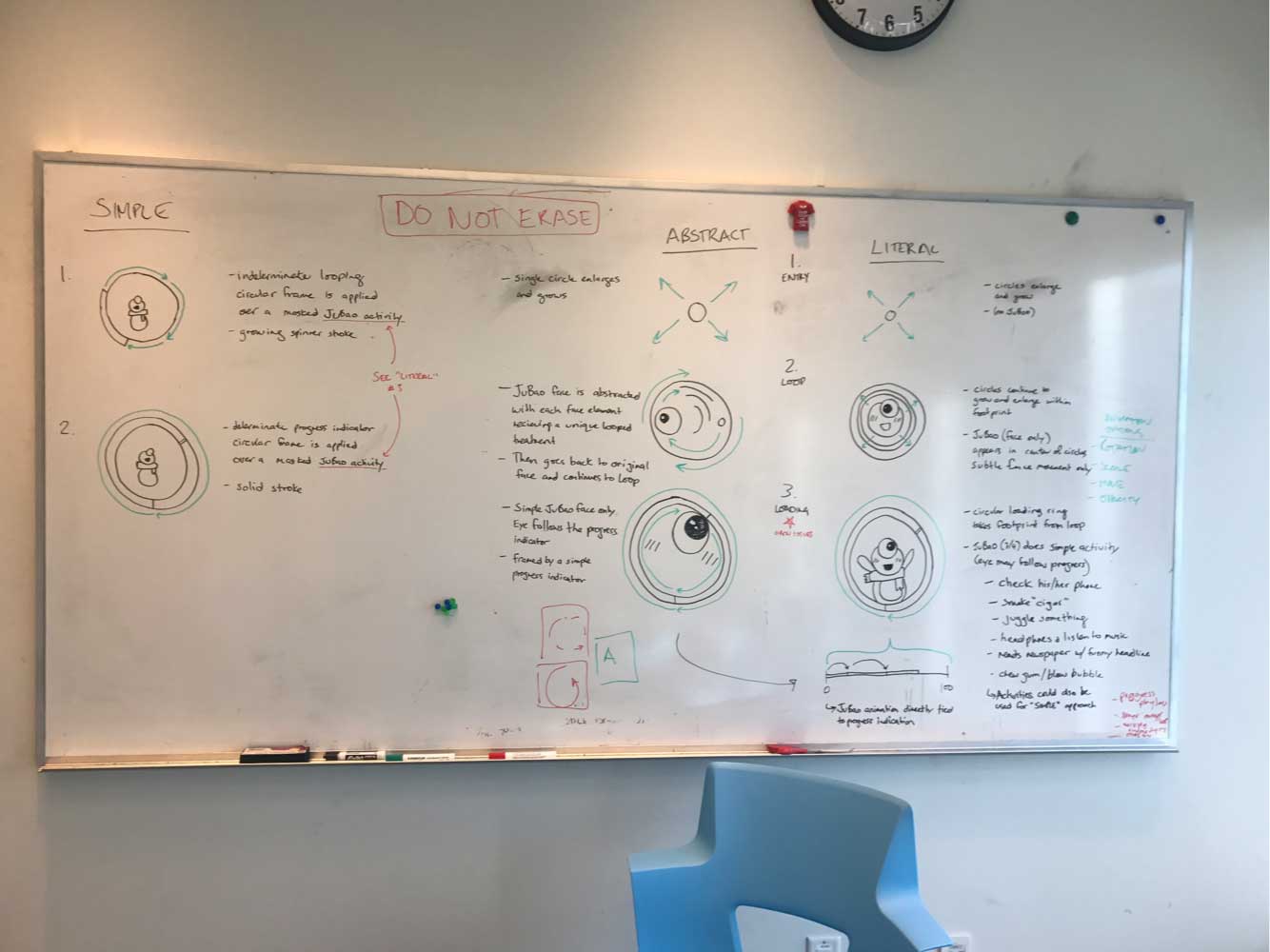

Avatar Animation

I animated the Hisense mascot character through multiple emotions, exported the animations as Lottie files, and built a development-ready animation library. I also built a tester tool that let the design team preview individual animations and chain them into sequences for user interactions.

Prototyping

I built several prototypes that merged the key components described above and showcased the user experience across each step of user interaction and system response.

High-Fidelity Prototype

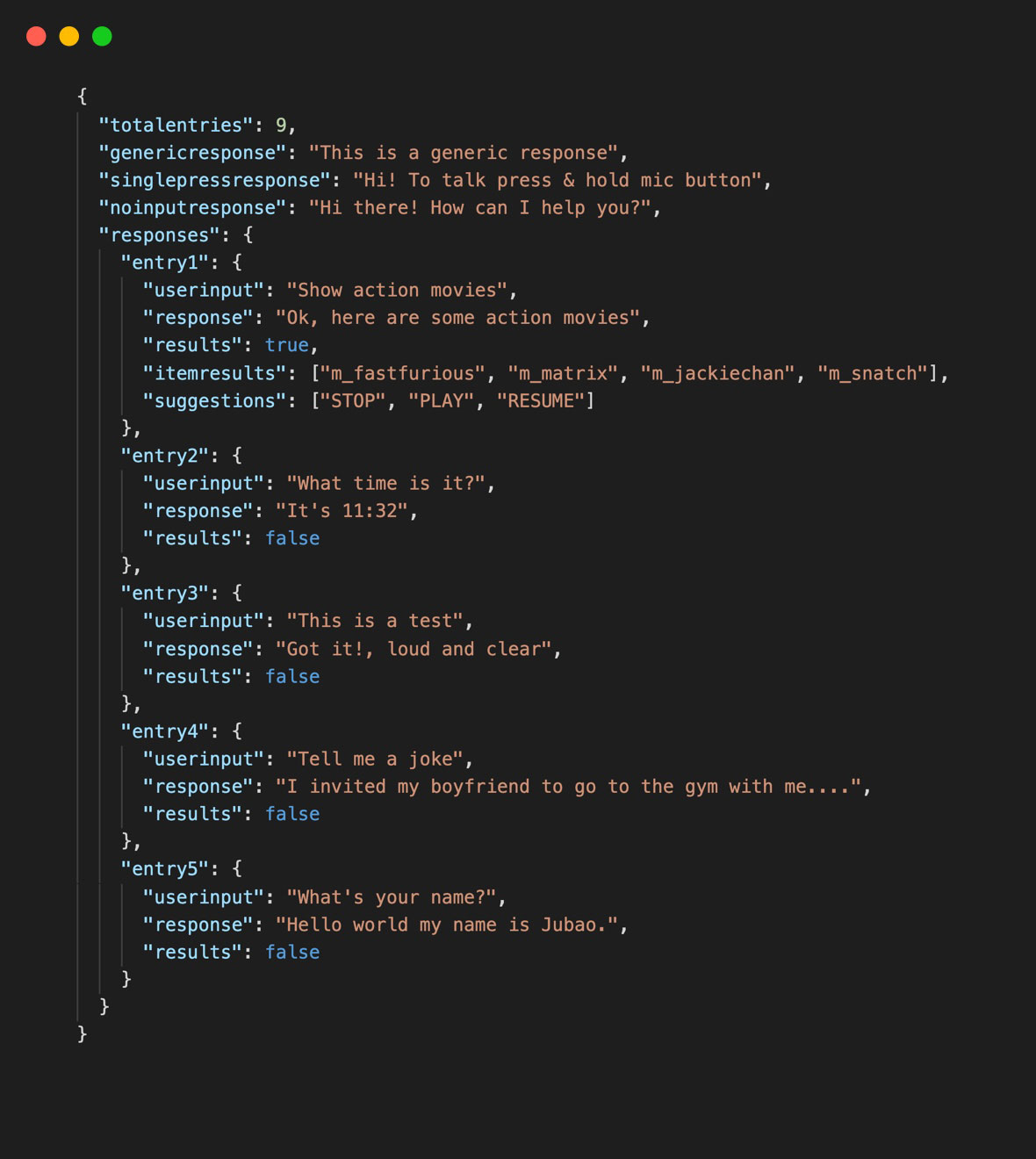

This voice-driven prototype showcased an end-product experience during early development, helping to establish the vision and user-test the design before implementation.

Hooking the prototype up to the Web Speech API allowed me to use real voice commands and to personalize user testing by customizing the commands and responses through an external file.
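As a rough illustration of command customization, the sketch below matches a recognized transcript against a list of commands that could be loaded from an external JSON file. The `VoiceCommand` shape, the sample entries, and the `matchCommand` helper are assumptions made for this example; the original file format is not documented here. In a browser, the transcript would typically come from a `SpeechRecognition` `result` event.

```typescript
// Hypothetical shape of one entry in the external commands file.
interface VoiceCommand {
  phrases: string[]; // utterances that trigger the command
  response: string;  // what the prototype shows or says in reply
}

// Sample data standing in for the contents of the external file (made up for illustration).
const commands: VoiceCommand[] = [
  { phrases: ["what's the weather", "weather today"], response: "It's sunny today." },
  { phrases: ["turn up the volume", "volume up"], response: "Volume raised." },
];

// Lowercase, strip punctuation, and collapse whitespace so matching is forgiving.
function normalize(text: string): string {
  return text
    .toLowerCase()
    .replace(/[^a-z0-9\s]/g, "")
    .replace(/\s+/g, " ")
    .trim();
}

// Return the first command whose phrase appears in the transcript, or null if none match.
function matchCommand(transcript: string, list: VoiceCommand[]): VoiceCommand | null {
  const heard = normalize(transcript);
  for (const command of list) {
    const hit = command.phrases.some((phrase) => {
      const wanted = normalize(phrase);
      return wanted.length > 0 && heard.indexOf(wanted) !== -1;
    });
    if (hit) return command;
  }
  return null;
}
```

Because the commands live in data rather than code, a test session can be tailored to a participant by editing the file, with no changes to the prototype itself.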

I hacked together a remote-and-microphone hardware setup so the testing experience felt as close to the real product as possible.